The computing group focuses on three core areas: AI/ML, Scientific Workflows, and Data Infrastructure. Each project or application typically involves multiple members across all focus areas. A few short examples of projects are provided below under their most relevant core area.

AI/ML and Analytical Tools

Artificial intelligence and machine learning (AI/ML) are an exciting focus area of the computing group, transforming experimental workflows by connecting materials synthesis with probes of electronic, chemical, and extrinsic sample properties. Rapid analysis of complex datasets unveils hidden patterns, rare events, and elusive correlations, improving beamline efficiency and user experience.

The AI/ML lead Tanny Chavez Esparza collaborates across Department of Energy (DOE) user facilities to develop software and test applications, and regularly showcases advancements from the ALS at user meetings and conferences, such as the International Small Angle Scattering Conference. She contributes significantly to various applications in MLExchange. Recently, with Alex Hexemer, she helped kickstart MLXN 2025, a global workshop focused on machine learning for x-ray and neutron sources.

MLExchange

MLExchange is an open-source, web-based MLOps platform designed to bridge the gap in adopting machine learning (ML) for scientific discovery. The ALS Computing Group Lead Alex Hexemer acts as principal investigator for this multifacility initiative, collaborating with the Advanced Photon Source and Center for Nanoscale Materials (ANL), Linac Coherent Light Source (SLAC), National Synchrotron Light Source II (BNL), and Center for Nanophase Materials Sciences (ORNL) to integrate ML into experimental workflows. Beamline endstations at the ALS use various applications from MLExchange to transform workflows and empower users.

The initiative addresses key challenges in ML adoption—spanning model development, algorithm evaluation, data curation, and deployment. MLExchange streamlines ML workflows, enabling users to apply advanced analytics more effectively. By fostering collaboration and knowledge-sharing, it accelerates ML-driven insights while promoting reproducibility, interoperability, and accessibility across DOE facilities.

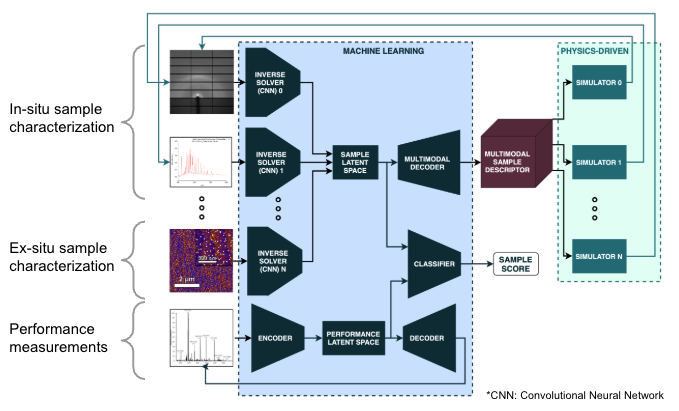

Physics-Informed ML

Physics-Informed ML is a laboratory-directed research and development (LDRD) project that enhances the analysis of complex materials by integrating data from multiple experimental techniques. At the APXPS/APPES endstation (ALS Beamline 11.0.2), researchers study surface chemistry and structure under real-world conditions, which is critical for fields like catalysis and electrochemistry. Traditional methods struggle to combine insights from different measurements; physics-informed ML is intended to bring those combined insights within reach of scientists.

This project uses ML guided by physics principles to unify data from x-ray spectroscopy, scattering, and microscopy. By improving data integration and reducing errors, it enables more accurate and interpretable material characterization, leading to better-designed catalysts, batteries, and other advanced materials.

A key innovation is latent space extraction using variational autoencoders (VAEs), which estimate statistical distributions for each characterization method, enabling effective data integration across different length scales. Additionally, physics-informed loss functions guide learning through pre-defined solvers, enhancing interpretability and reducing overfitting.
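The source does not spell out the loss used in this project. As a rough illustration only, the sketch below shows one common way a VAE objective can be extended with a physics term: reconstruction error, a KL regularizer on the latent distribution, and a penalty on the residual returned by a solver. The function names, the weights `beta` and `lam`, and the toy "total intensity" constraint are all hypothetical, and a real model would use a deep learning framework with automatic differentiation rather than plain Python lists.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]


def kl_to_standard_normal(mu: Vector, log_var: Vector) -> float:
    """KL divergence from N(mu, diag(exp(log_var))) to the standard normal N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def mean_squared_error(x: Vector, x_hat: Vector) -> float:
    """Average squared difference between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def total_intensity_residual(x_hat: Vector, expected_total: float = 1.0) -> float:
    """Hypothetical stand-in for a pre-defined solver: the reconstructed
    signal should sum to a known total. Returns 0.0 when satisfied."""
    return sum(x_hat) - expected_total


def physics_informed_vae_loss(
    x: Vector,
    x_hat: Vector,
    mu: Vector,
    log_var: Vector,
    physics_residual: Callable[[Vector], float],
    beta: float = 1.0,  # assumed weight on the KL term
    lam: float = 1.0,   # assumed weight on the physics term
) -> float:
    """Reconstruction + beta * KL + lam * (physics residual squared)."""
    recon = mean_squared_error(x, x_hat)
    kl = kl_to_standard_normal(mu, log_var)
    physics = physics_residual(x_hat) ** 2
    return recon + beta * kl + lam * physics


if __name__ == "__main__":
    x = [0.2, 0.3, 0.5]
    x_hat = [0.2, 0.3, 0.7]  # violates the toy constraint (sums to 1.2)
    print(physics_informed_vae_loss(x, x_hat, [0.0, 0.0], [0.0, 0.0],
                                    total_intensity_residual))
```

In this form the physics term penalizes reconstructions that fit the data but break the constraint, which is one way such a loss could discourage overfitting.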

Scientific Workflows and Visualization

As beamlines grow in complexity and features, the workflows that support them become critical for enabling coordination between beamlines and computational resources. Visualizations of the experimental system and ML models add another necessary layer, giving end users intuitive and efficient feedback during coveted experiment time. This area is critical for both ongoing ALS work and the future capabilities of ALS-U.

Scientific Workflows and Visualization Lead Wiebke Koepp develops data analysis and visualization workflows for synchrotron experiments, with a current focus on enabling autonomous experiments through real-time processing and decision-making tools. She collaborates across facilities on cross-cutting infrastructure efforts and recently presented the team’s and collaborators’ work at scattering-focused conferences, as well as user meeting workshops at DESY and the ALS.

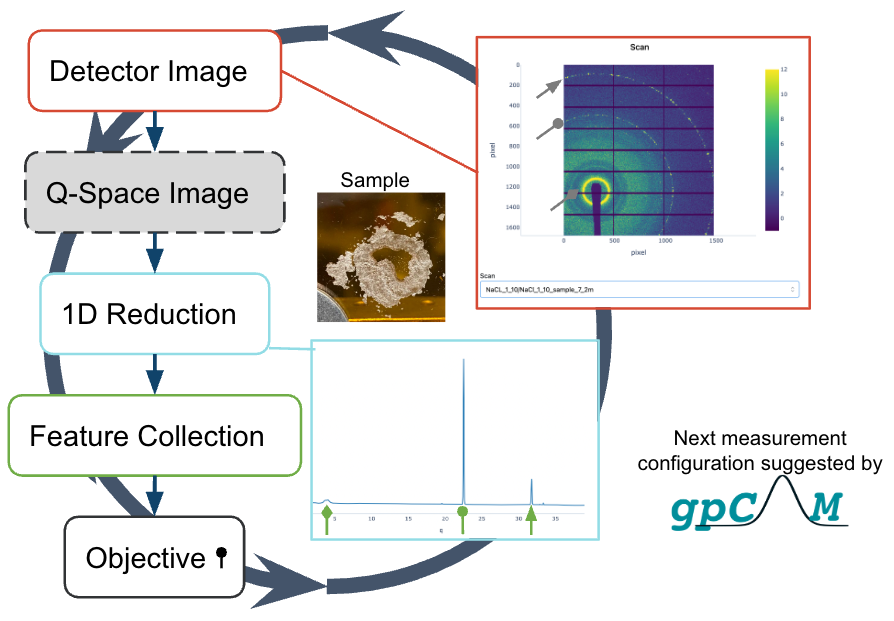

ILLUMINE

The ILLUMINE (Intelligent Learning for Light Source and Neutron Source User Measurements including Navigation and Experiment Steering) project, led by SLAC and launched in FY23, spans five light sources and two neutron sources. It aims to develop capabilities for rapid data analysis and autonomous experiment steering, enabling scientists to optimize instrument configurations, efficiently leverage large datasets, and maximize the use of limited beam time.

A key contribution from the ALS is the development of web-accessible, user-friendly data presentation tools that help researchers evaluate results quickly and select the most effective analytical approaches.

ILLUMINE aligns with ongoing efforts to enhance computing capabilities at the National Energy Research Scientific Computing Center (NERSC) and integrate with ASCR IRI initiatives. Additionally, it builds on our facility’s collaboration with DESY, which is working to establish infrastructure for autonomous small- and wide-angle scattering experiments across multiple synchrotrons with varying computing and control environments.

Since the start of the project, several autonomous experiments testing infrastructure and visualization capabilities have been conducted at Beamline 7.3.3, providing valuable insights into improving real-time experiment feedback and data-driven decision-making.

Components have been tested at ALS 7.3.3, DESY P03, and NSLS-II 12-ID (SMI).

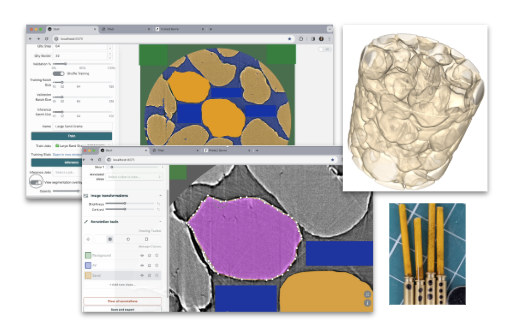

3D Visualization and Segmentation at Imaging Beamlines

The High-Resolution Image-Segmentation application, developed in collaboration with Plotly, provides an intuitive browser-based interface for easily defining segment classes on slices of reconstructed images, kicking off training and segmentation jobs, and reviewing results. The system leverages the DLSIA framework, which offers multiple machine learning network implementations for image segmentation, along with an intuitive API for tuning network architectures. Data is read from and written to the Tiled data service, ensuring a consistent and scalable interface for image and mask data.

To support preprocessing, a multi-resolution 3D region-of-interest (ROI) selection tool, developed in collaboration with Kitware, allows users to crop and visualize 3D volumes within Jupyter notebooks prior to segmentation. Together, these tools make advanced image analysis faster, more efficient, and more accessible to ALS users.

Data Infrastructure

Acting as a backbone for most projects in the Computing Group, data infrastructure must be robust enough to handle data-hungry ML services and complex workflows that move large amounts of data from different beamlines to high-performance computing centers. This will be especially important after the ALS upgrade. Another key area of data infrastructure is aligning data with FAIR principles, ensuring data is findable, accessible, interoperable, and reusable.

Data Infrastructure lead Dylan McReynolds directs infrastructure projects related to data workflows, drawing on his previous industry experience as a software engineer to provide modern and scalable data solutions to the ALS. In addition to developing data management solutions for the ALS, McReynolds architects software deployments used by the computing group to run their software at other DOE user facilities. He also acts as a technical steering committee member for Bluesky, with a particular focus on Tiled, a data service maintained by the Bluesky project.

Splash Flows Globus

Splash Flows Globus is a comprehensive system for automating data movement workflows at Advanced Light Source (ALS) beamlines. Its primary purpose is to efficiently manage the transfer of experimental data between various endpoints, including different storage systems, high-performance computing (HPC) facilities, and long-term archival storage, while also managing experiment metadata. Without this system, extensive manual effort is often required to move data sets between storage systems before they can be analyzed, reducing efficiency where it is needed most.

At ALS Beamline 8.3.2, which performs microtomography (micro-CT), Splash Flows Globus automates the movement of raw tomography detector data to the NERSC supercomputing center, where it is rapidly transformed into 3D volumes. These volumes can then be visualized back at the beamline, providing end users with a fast and complete analysis lifecycle.
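The round trip described above (raw data out to HPC, reconstruction, results back to the beamline) can be pictured as a short chain of steps. The sketch below is a local-filesystem stand-in only: it does not use Globus or any part of Splash Flows Globus, the directory names and the `reconstruct` placeholder are hypothetical, and the checksum check simply illustrates the kind of verification a managed transfer service would perform.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256sum(path: Path) -> str:
    """Hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def transfer(src: Path, dest_dir: Path) -> Path:
    """Copy src into dest_dir and verify the copy by checksum.
    Stand-in for a managed transfer between storage endpoints."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / src.name
    shutil.copy2(src, dest)
    if sha256sum(src) != sha256sum(dest):
        raise OSError(f"checksum mismatch for {src.name}")
    return dest


def reconstruct(raw: Path) -> Path:
    """Placeholder for tomographic reconstruction at the HPC center.
    It only reverses the bytes; no real reconstruction happens here."""
    out = raw.with_suffix(".recon")
    out.write_bytes(raw.read_bytes()[::-1])
    return out


def run_flow(raw: Path, hpc_dir: Path, beamline_dir: Path) -> Path:
    """Move raw data to HPC, reconstruct it, and return the result to the beamline."""
    staged = transfer(raw, hpc_dir)
    volume = reconstruct(staged)
    return transfer(volume, beamline_dir)


def demo() -> Path:
    """Run the flow on a small fake scan inside a temporary directory."""
    root = Path(tempfile.mkdtemp())
    raw = root / "detector" / "scan_0001.raw"
    raw.parent.mkdir(parents=True)
    raw.write_bytes(b"raw tomography frames")
    return run_flow(raw, root / "hpc_scratch", root / "beamline_results")


if __name__ == "__main__":
    print(demo())
```

Expressing each step as a function that returns the path it produced keeps the flow easy to extend, for example with an archival step after the final transfer.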

Radius Data Portal

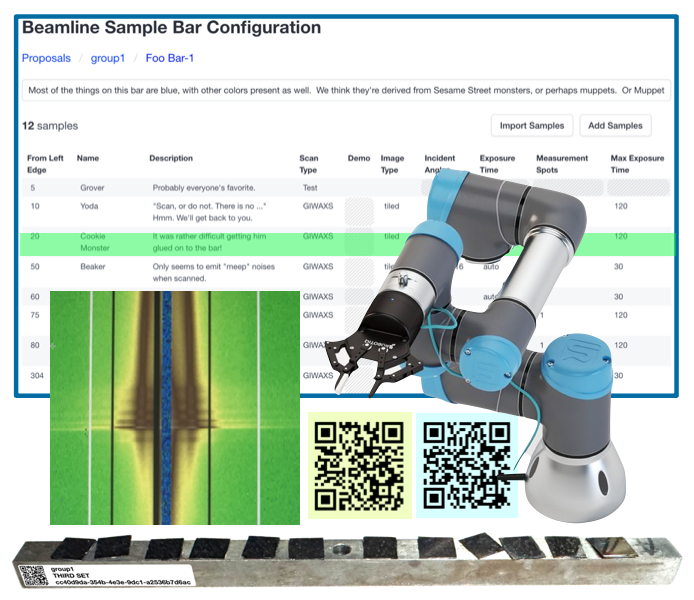

As part of the broader effort to improve automation across the whole data lifespan—from before the experiment begins to post-analysis and publication—we are contributing multiple software components to the Radius project at Beamline 7.3.3.

One of these is a browser-based sample metadata tracking application that scientists use to describe samples and specify their treatment at the beamline before arriving at the facility. This system is integrated with a QR-code-based sample tracking system, allowing users to physically label their samples during preparation so that beamline robots and technicians can identify and track them throughout the experiment and beyond.

The software and labeling system are both built with an eye towards generalization to other beamlines, and the eventual unification of sample tracking across the facility.
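To make the QR-code idea concrete, the sketch below shows one possible way a label could be tied to a sample record. It is an assumption-laden illustration, not the Radius implementation: the `SampleRecord` fields, the payload format, and the in-memory `registry` are all hypothetical, and generating the QR image itself is left out.

```python
import json
import uuid
from dataclasses import dataclass, field


@dataclass
class SampleRecord:
    """Hypothetical sample metadata entered before arriving at the facility."""
    name: str
    treatment: str
    beamline: str = "7.3.3"
    sample_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def label_payload(sample: SampleRecord) -> str:
    """Compact string to encode in a QR code. Only the ID goes on the label,
    so metadata can change later without reprinting it."""
    return json.dumps({"v": 1, "id": sample.sample_id}, separators=(",", ":"))


def resolve_label(payload: str, registry: dict[str, SampleRecord]) -> SampleRecord:
    """Look up the full record from a scanned label payload."""
    data = json.loads(payload)
    return registry[data["id"]]


if __name__ == "__main__":
    sample = SampleRecord(name="film A", treatment="anneal before exposure")
    registry = {sample.sample_id: sample}
    scanned = label_payload(sample)
    print(resolve_label(scanned, registry).name)
```

Keeping the label payload small and versioned (`"v": 1`) is a design choice in this sketch that would make it easier to reuse the same labels at other beamlines.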

Bluesky Web

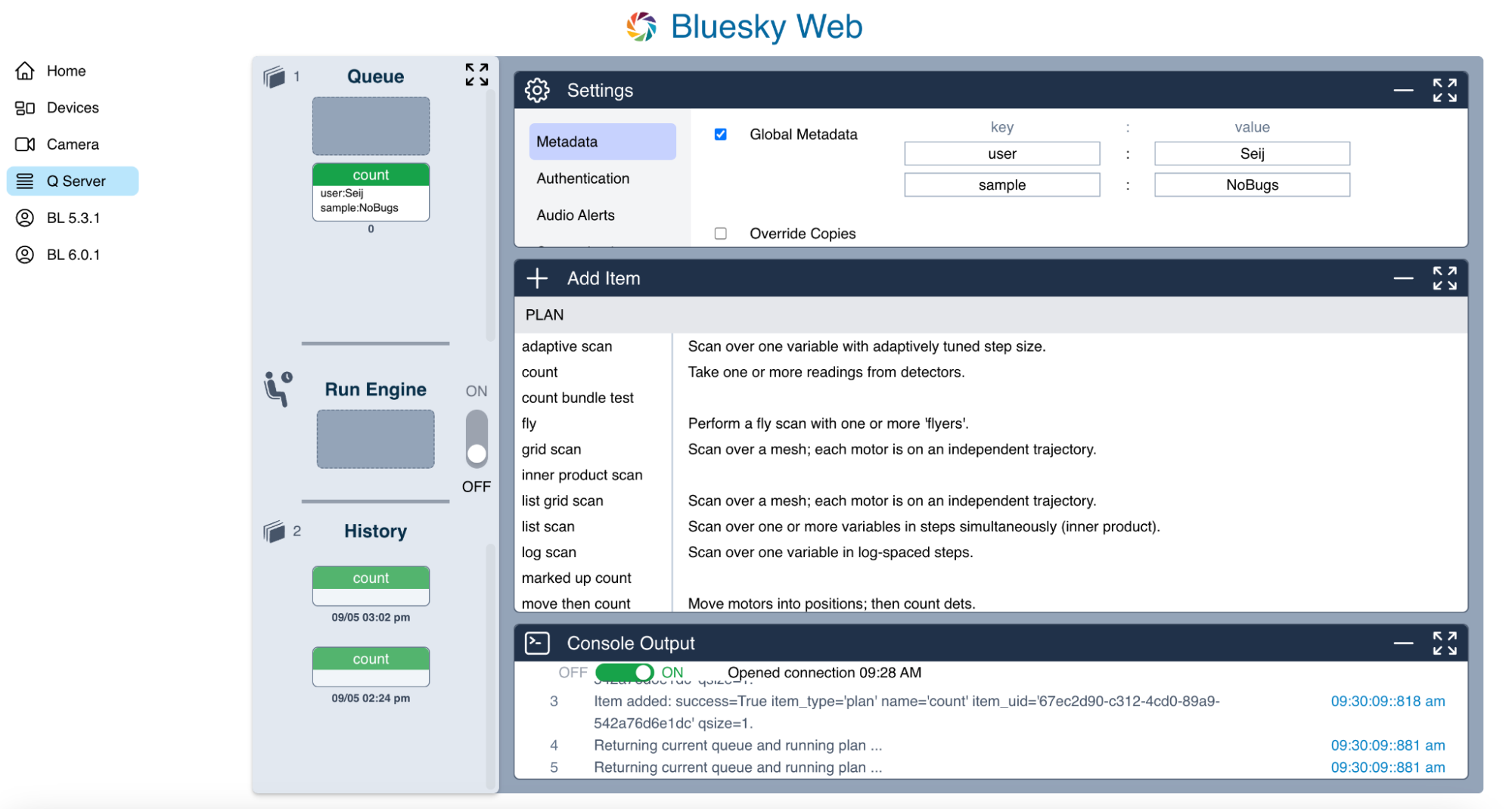

As part of the ALS-U upgrade, new beamlines will utilize Bluesky, an open-source Python controls system developed by NSLS-II, and EPICS, a lower-level controls system software. We have begun development of an open-source web application, Bluesky Web, that can control beamlines using Bluesky/EPICS while providing full controls and experiment functionality. This web application offers an efficient visual layer for end users operating beamlines, reducing onboarding time and enabling users to run complex experiments.

One feature of Bluesky Web, currently installed at Beamline 5.3.1, is a Queue Server interface. This allows users to run experiment plans at the beamline in a clearly defined system that coordinates multiple commands to prevent conflicts among concurrent users. Users can add various plans to the queue with automatic parameter validation and track their plans as they are executed in real time.
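The queueing behavior described above can be modeled in a few lines. The sketch below is a toy, standard-library model and does not use the Bluesky Queue Server API: `PlanQueue`, the `count` plan, and the user names are hypothetical. It shows plans being validated against their declared parameters when added, then executed one at a time in order so that submissions from different users cannot collide.

```python
import inspect
from collections import deque
from typing import Any, Callable


def count(detector: str, num: int = 1) -> str:
    """Hypothetical plan: pretend to take `num` readings from `detector`."""
    return f"count {detector} x{num}"


class PlanQueue:
    """Toy single-consumer queue: validates on add, runs strictly in order."""

    def __init__(self, allowed_plans: dict[str, Callable[..., Any]]):
        self._plans = dict(allowed_plans)
        self._queue: deque = deque()
        self.history: list = []

    def add(self, user: str, plan_name: str, **params: Any) -> int:
        """Validate a plan's parameter names and append it to the queue.
        Returns the queue length after adding."""
        if plan_name not in self._plans:
            raise ValueError(f"unknown plan: {plan_name}")
        # bind() raises TypeError for missing or unexpected parameters.
        # It checks names only, not value types.
        inspect.signature(self._plans[plan_name]).bind(**params)
        self._queue.append((user, plan_name, params))
        return len(self._queue)

    def run_next(self) -> Any:
        """Execute the oldest queued plan and record it in the history."""
        user, plan_name, params = self._queue.popleft()
        result = self._plans[plan_name](**params)
        self.history.append((user, plan_name, result))
        return result


if __name__ == "__main__":
    queue = PlanQueue({"count": count})
    queue.add("alice", "count", detector="det1", num=3)
    queue.add("bob", "count", detector="det2")
    while True:
        try:
            print(queue.run_next())
        except IndexError:  # queue is empty
            break
```

Rejecting a badly parameterized plan at submission time, rather than when it reaches the front of the queue, keeps one user's mistake from stalling plans queued behind it.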