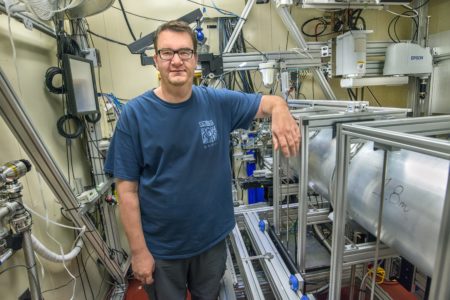

Alex Hexemer leads the newly formed Computing Program at the ALS. His previous experience as a postdoc and beamline scientist at the ALS has prepared him for the challenges and opportunities in computing for high volumes of data.

How has your career led you to where you are today?

After graduate school in polymer science with Ed Kramer in Santa Barbara, I applied for a postdoc here with Howard Padmore. We built a SAXS beamline, and then we started building an RSoXS beamline, which Cheng Wang now runs. Once the SAXS beamline was running, people had problems handling their data. So I started looking into solving analysis problems and received a DOE Early Career award, which led to more work in computing.

What do you like about working at the ALS?

We just started this computing program, and it gives us the chance to take the best ideas from around the world and build the best system. That's fun. And the enthusiasm, all the way from management to the beamline scientists, is exciting.

Several people at the ALS have been very interested in data for a long time, and that interest started several projects. Dula Parkinson and I are members of the Center for Advanced Mathematics for Energy Research Applications (CAMERA), which focuses on analysis. With Craig Tull, we started a project called SPOT Suite to move data from Dula's, Nobumichi Tamura's, and my beamlines at the ALS directly to the National Energy Research Scientific Computing Center (NERSC) so the data could be examined there. We plan to replace SPOT Suite with a production-ready system, applying it to the high-data beamlines first and eventually to every beamline at the ALS. We have limited resources, so we're working with the Lab's IT Division, NERSC, the Energy Sciences Network (ESnet), CAMERA, and the Molecular Foundry's National Center for Electron Microscopy (NCEM).

What is your vision for the Computing Program?

I'm officially the only one in the group right now! We're in the process of hiring somebody to handle data movement. Data movement comes in two types. We have regular beamlines, where you move the data into storage and display it there. We also have beamlines that require real-time processing, like COSMIC. There, you stream the data directly from the COSMIC beamline into a GPU cluster and reconstruct it. You use it like a microscope; it's ptychography.

Ron Pandolfi, from CAMERA, developed Xi-cam, a plugin-based software interface. When I'm running at the tomography beamline, I can use a scattering plugin. It also runs at other facilities, like the CMS beamline at the National Synchrotron Light Source II (NSLS-II). The idea is that users on the SAXS/WAXS beamline here or on the CMS beamline there have the same interface and analysis pipeline, so the experience is familiar and streamlined. I envision Xi-cam as the way people will access their data, because all the metadata will be in a database linked to the data and analysis. That's the long-term vision.

Will this be applicable to users all over the world?

Yes. We've worked with NSLS-II, the Advanced Photon Source (APS), the Stanford Synchrotron Radiation Lightsource (SSRL), and the Linac Coherent Light Source (LCLS). We're trying to coordinate among the synchrotrons, and everyone is contributing a piece. It's a US-wide effort, and I think that's exciting.

It won't be done overnight, and it takes a lot of effort to come up with initial ideas to present to the beamline scientists. I was a beamline scientist for many years. We want to make sure the beamline scientists are on board and that what we're building is aligned with their work. We'll help give them faster computing facilities and make it easier for remote users to get their data. Data volumes are growing tremendously, so many beamline users can't take their data home anymore. We're giving them a way to analyze it here.

What do you do in your free time?

I have a 16-year-old daughter. That usually keeps me busy; family life is important. I like biking and photography, taking pictures both at work and at home.